Physicists and mathematicians at the Universities of Portsmouth and Central Lancashire in the U.K. have discovered a new law of physics that could predict genetic mutations in organisms, including viruses, and help judge their potential consequences. The new law, the second law of information dynamics (“infodynamics”), could have a significant impact on research on the genome, evolution, computation, big data, physics, and cosmology.

The scientists present their deduction of the new law in an article titled “Second law of information dynamics,” published in the journal AIP Advances on July 11, 2022. Melvin Vopson, PhD, an information physicist and senior lecturer of mathematics and physics at the University of Portsmouth, is the lead author of the study. Vopson conducted the study in collaboration with Serban Lepadatu, PhD, lecturer in physics at the University of Central Lancashire.

“The entropy of the universe tends to a maximum,” said Rudolf Clausius, a 19th-century German scientist. This second law of thermodynamics stipulates that the entropy, or disorder, of an isolated system increases or stays constant over time.

“In physics, there are laws that govern everything that happens in the universe: how objects move, how energy flows. Everything is based on the laws of physics,” said Vopson, whose research explores information systems, from motherboards and discs in computers to RNA and DNA genomes in organisms.

The irrefutable second law of thermodynamics is linked to the unidirectional march of time. “Imagine two transparent glass boxes. In the left you have red smoke and on the right, you have blue smoke, and in between them is a barrier. If you remove the barrier, the two gases will mix, and the color will change,” Vopson explains. “There is no process that this system can undergo to separate by itself into blue and red again. You cannot lower the entropy or organize the system to how it was before, without expending energy.”

Based on the universal second law of thermodynamics, Vopson had expected that entropy in information systems would also increase over time. But when he and Lepadatu examined how information systems evolve, they found that the disorder of these systems behaves counterintuitively as time passes.

“What Dr. Lepadatu and I found was the exact opposite—it decreases over time. The second law of information dynamics works exactly in opposition to the second law of thermodynamics!”

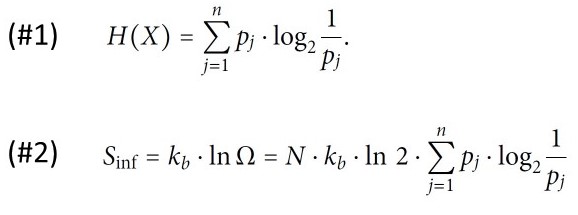

Information systems are defined as physical systems that contain information states, as per the information theory framework that Shannon introduced in 1948. However, ‘Shannon’s Information Entropy’ should not be confused with ‘Overall Information Entropy’ as defined in the current study, cautions Vopson.

“Shannon’s Information Entropy measures the information bit content per event of the system observed, while the [Overall] Information Entropy measures the physical entropy component created by the information states within the system,” explained Vopson. The two quantities are different but mathematically related by a “product equation,” in which Overall Information Entropy is the product of Shannon’s Information Entropy and other terms.
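To make “information bit content per event” concrete, here is a minimal Python sketch that computes Shannon’s information entropy H(X) for a short string of nucleotide letters. The function name `shannon_entropy` and the toy sequences are illustrative assumptions, not taken from the study, and treating each letter as one event is a simplification.

```python
import math
from collections import Counter


def shannon_entropy(sequence: str) -> float:
    """Return Shannon entropy H(X) in bits per symbol for a sequence.

    Hypothetical helper for illustration; each character counts as one event.
    """
    if not sequence:
        raise ValueError("sequence must not be empty")
    n = len(sequence)
    counts = Counter(sequence)
    # H(X) = sum over symbols of p * log2(1 / p), where p = count / n
    return sum((c / n) * math.log2(n / c) for c in counts.values())


# Toy sequences (assumed, not real genome data)
uniform = shannon_entropy("ACGT")  # four bases, each equally frequent
single = shannon_entropy("AAAA")   # only one base ever appears

print(uniform, single)
```

A sequence that uses all four bases equally carries the maximum of 2 bits per base, while a sequence made of a single repeated base carries none.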

Vopson and Lepadatu found that the Overall Information Entropy, as defined in equation #2, decreases over time.

“This can only take place if N (total number of events / information states) decreases, or H(X), Shannon information entropy, decreases over time,” explains Vopson. “It turns out that digital information systems display a decrease of N over time, while biological systems, where N is preserved (same size DNA or RNA), the H(X) decreases over time via genetic mutations. This is why I regard this as a universal law.”
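The two routes Vopson describes can be sketched numerically. The snippet below assumes the “other terms” in the product equation are the Boltzmann constant and ln 2, so that the overall entropy is N · k_B · ln 2 · H(X). That form is our reading and is not spelled out in this article. The function name and the numbers are hypothetical and chosen only for illustration.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def overall_information_entropy(n_events: int, shannon_bits: float) -> float:
    """Overall information entropy in J/K under the assumed product form.

    Assumption: S_info = N * k_B * ln(2) * H(X), with H(X) in bits per event.
    """
    return n_events * K_B * math.log(2) * shannon_bits


# Illustrative numbers only, not data from the study
baseline = overall_information_entropy(1000, 2.0)
fewer_events = overall_information_entropy(900, 2.0)  # N drops, H(X) fixed
lower_h = overall_information_entropy(1000, 1.9)      # N fixed, H(X) drops

print(fewer_events < baseline, lower_h < baseline)
```

Because the quantity is a product of non-negative factors, it can fall only if N or H(X) falls, which matches the digital and biological cases in the quote above.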

Using two radically different information systems, one digital and one biological, the authors show that the second law of infodynamics requires information entropy to remain constant or to decrease over time. Vopson and his team analyzed genomes of SARS-CoV-2, the virus that causes COVID-19, and found that their information entropy decreased over time.

“The best example of something that undergoes a number of mutations in a short space of time is a virus. The pandemic has given us the ideal test sample as SARS-CoV-2 mutated into so many variants and the data available is unbelievable,” said Vopson. “The COVID data confirms the second law of infodynamics. This research opens up unlimited possibilities. Imagine looking at a genome and judging whether a mutation is beneficial before it happens.”

Vopson claims this new law could be what drives genetic mutations in biological organisms. The prevailing view in evolutionary biology is that mutations occur at random and that those conferring a survival benefit on an organism persist.

“But what if there is a hidden process that drives these mutations? Every time we see something we don’t understand, we describe it as ‘random’ or ‘chaotic’ or ‘paranormal’, but it’s only our inability to explain it,” contends Vopson. “If we can start looking at genetic mutations from a deterministic point of view, we can exploit this new physics law to predict mutations, or the probability of mutations, before they take place.”

Technology developed from this new perspective could be game-changing for gene therapy, pharmaceuticals, evolutionary biology, and research on infectious diseases.