An Essential Approach for Translational Proteomics

<Sponsored Content>

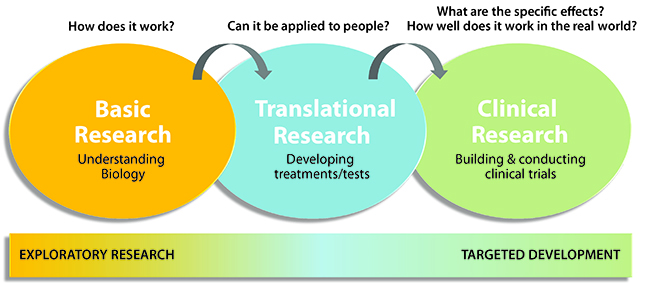

We are in a new age of clinical research, in which experimental design is moving from cohort sizes of 2×2 and 10×10 for putative biomarker panel identification to hundreds or even thousands of samples. New attention is being paid to designing translational studies so that the resulting concepts can be transferred across labs and projects. The time and cost associated with large-scale studies mandate high-quality data acquisition for every project.

Scientific Challenge

Translational and clinical research studies profile individuals against a cohort to mine for the presence of putative biomarkers. Study size has grown tremendously over the last five years to include hundreds and even thousands of samples. Increasing sample numbers create significant challenges for assessing the original experimental hypothesis due to:

• Study duration, which can be long enough to span interruptions in data acquisition.

• Amount of data generated, which becomes challenging to process and interpret.

• Experiment set-up or method development, particularly within a consortium where transferability becomes critical for reproducibility.

Adding to these challenges, current analytical methods leave a gap between bench studies and clinical application, and between small and large-scale experiments. Quality control (QC) standards are either not used correctly or tailored to specific applications, and thus cannot be shared. When reproducibility is not assessed at each step of the analysis, methods cannot be verified by other labs. This directly contributes to the translational gap and has resulted in bench studies that never make it to the clinic.

Approach to proteomic biomarker studies

Solution for Translational Proteomics

The use of an optimized, systematic, and standardized approach to proteomics experiments permits the direct comparison of results across experiments, projects, and laboratories.

Identifying and evaluating each step within a workflow allows QC steps to be included along the way. Incorporating standardized QC into sample collection and storage, sample preparation, system suitability testing, and sample analyses ensures data integrity and reproducible analyses. Commercially available, externally validated standards support this uniformity across workflows.

Once QC methods are in place, system suitability and performance can be measured both during and after the study to determine systematic and experimental variance, which in turn facilitates more accurate quantification of biological variance. Successful QC methods can then be qualified and implemented in subsequent translational studies on biological systems.
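As a rough illustration of how QC measurements can separate technical from biological variance, the sketch below computes the coefficient of variation (CV) of repeated injections of a pooled QC sample and then subtracts that technical variance from the total variance observed across study samples. This is a minimal sketch and not a method described in this article: it assumes technical and biological variance are independent and additive, that the QC pool and the study samples sit at comparable signal levels, and all function names and peak-area values are hypothetical.

```python
from statistics import mean, variance


def cv_percent(values):
    """Coefficient of variation in percent (sample standard deviation / mean)."""
    return 100.0 * variance(values) ** 0.5 / mean(values)


def biological_variance(study_values, qc_values):
    """Estimate biological variance as total variance minus technical variance.

    Assumption: the two sources of variance are independent and additive.
    The result is clamped at zero because sampling noise can make the
    difference slightly negative.
    """
    total = variance(study_values)    # biological + technical
    technical = variance(qc_values)   # repeated injections of one QC pool
    return max(total - technical, 0.0)


# Hypothetical peak areas for a single peptide (arbitrary units).
qc_pool = [100.0, 102.0, 98.0, 101.0, 99.0]   # same pooled QC sample, injected 5 times
study = [80.0, 120.0, 95.0, 130.0, 75.0]      # five different study samples

print(f"QC CV: {cv_percent(qc_pool):.2f}%")
print(f"Estimated biological variance: {biological_variance(study, qc_pool):.1f}")
```

A low QC CV suggests the platform is stable enough that most of the spread across study samples reflects biology rather than the measurement itself. In practice a lab would track this per analyte and per batch.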

Future Plans

Harmonization of QC methods across studies has already been observed in new targeted metabolomics studies. Systems such as the Biocrates AbsoluteIDQ p180, which include calibration standards, QC standards, integrated software, and clear QC metrics, provide reliable, QC-tested commercial standards that can be applied to any analytical method used in metabolomics studies.

Steps toward reproducible quantitative analyses within and across labs are improving experimental results and providing a solid foundation for further analysis. These steps include implementing common QC samples, analytical standards such as stable isotope-labeled (SIL) peptides, and biological QC standards such as reference pools of serum/plasma from NIST and Golden West. Together they help ensure that money is not wasted reinventing the wheel. Further assistance from industry partners such as Thermo Fisher Scientific with workflow standardization and QC reporting makes it easier for individual labs to start discovery experiments at a level of quality that keeps the results meaningful in the long term.