Ryan Sasaki, Director of Global Strategy, ACD/Labs

Emerging standards for instrumentation and data processing promise to improve data retrieval and re-use.

To repeat a characterization provided by the newly formed Allotrope Foundation, “From 50,000 feet, pharmaceutical companies [appear to] produce two things: new medicines and the data that supports the discovery, manufacturing, quality, and efficacy of these medicines. Underpinning every experiment, every scientific decision, and every regulatory submission is data generated by a scientist using an instrument in the laboratory.”1

The data that the quote above refers to is, in general, Small Data. This kind of data does not fit neatly into the Big Data mantra echoing throughout the pharmaceutical industry. Although Big Data is in the media spotlight, Small Data continues to challenge IT organizations. Left unresolved, fundamental Small Data problems make it difficult to get the most out of scientific data. Handled well, however, Small Data can drive decision making in the laboratory.

Analytical data investments by pharmaceutical organizations reach millions of dollars per year and encompass instrument purchases, maintenance, consumable supplies, personnel, etc. Unfortunately, these organizations face significant challenges when it comes to maximizing their returns. Huge strides have been made in the automation and workflow aspects of processing, analysis, and reporting for analytical data; however, one major hurdle is preventing these organizations from maximizing their investments in the generation of analytical data.

Source: ACD Labs

Heterogeneous Instrument Data Formats

Currently, there are over 20 historically important analytical instrument manufacturers, many of which offer more than one instrument for any given purpose—chromatography, mass spectrometry, optical spectroscopy, nuclear magnetic resonance (NMR) spectroscopy, thermal analysis, or X-ray powder diffraction. Besides this abundance of mainstream instrumentation, fit-to-purpose instrumentation is available to scientists and research organizations for specific applications. To complicate the matter yet further, the data generated by all this instrumentation may be visualized, processed, and analyzed with a variety of different software applications, and results may be stored in a variety of proprietary data formats.

Organizations want to be as agile as possible in dealing with the vast array of scientific problems they need to tackle. As a result, laboratories end up with a complex mix of instruments and software applications, and data generated at great expense becomes trapped in a variety of different formats, where it is largely unavailable for retrieval and re-use.

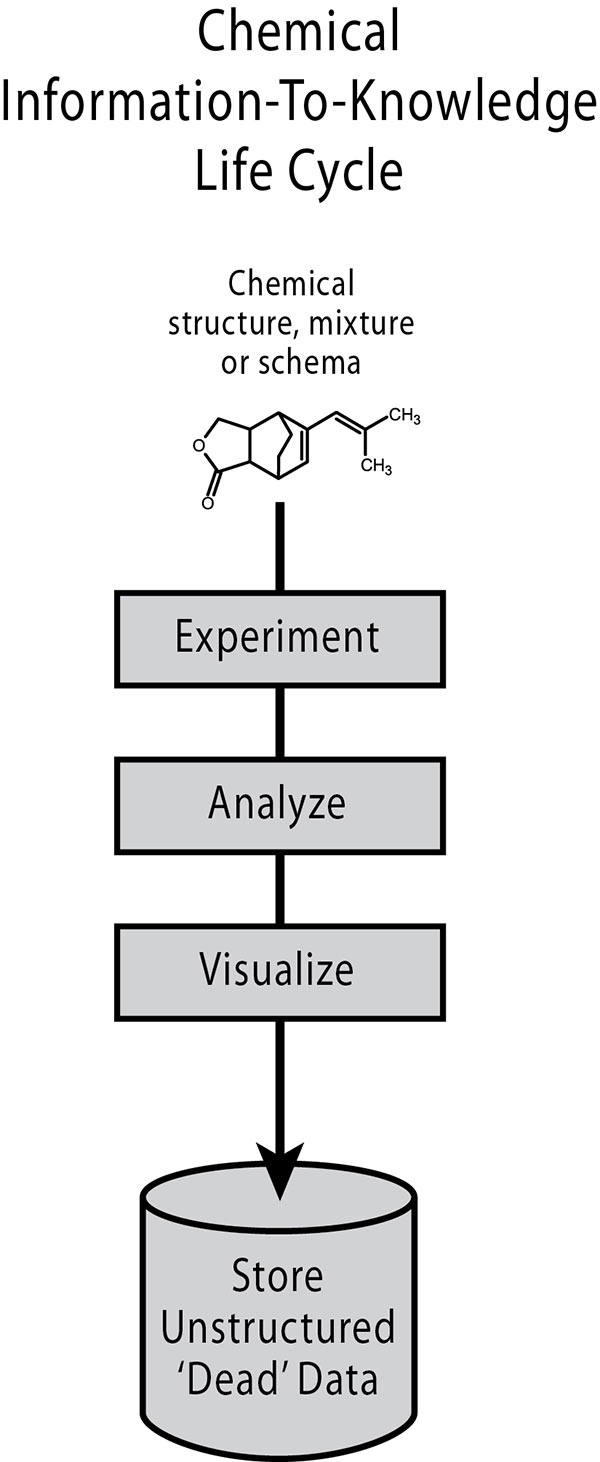

The One-and-Done Data Life Cycle

A major inefficiency that significantly increases the time and cost of chemical R&D is the traditional one-and-done life cycle, where data is captured and essentially frozen as “dead” data in a variety of different formats.2 This data becomes difficult to search and retrieve, and nearly impossible to manipulate and reanalyze.

International Data Corporation (IDC) estimates an enterprise with 1,000 knowledge workers loses a minimum of $6 million a year in the time workers spend searching for—and not finding—needed information.3 As a result, many laboratory experiments represent expensive, time-consuming repeats of previous experiments, simply because the data cannot be found or, if found, cannot be reused. With many IT organizations focused on improved knowledge management initiatives, this data life cycle simply cannot continue if organizations expect to extract more knowledge out of their data sources.

The Drive for Standards

The issues caused by unstructured data have challenged IT organizations in all industries, and many efforts have been made to solve them, including efforts in the area of chemistry. For example, there have been several data format standardization efforts in recent years for spectroscopy and spectrometry data: ANDI, JCAMP-DX, SpectroML, and mzML, to name a few.4
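To make the appeal of a vendor-neutral format concrete, here is a minimal Python sketch of reading the header of a JCAMP-DX file, which stores metadata as plain-text labeled records of the form `##LABEL=value`. The function name `parse_jcamp_header` and the sample contents are hypothetical and exist only for illustration. The sketch reads header records only and does not decode the spectral data itself.

```python
import re


def parse_jcamp_header(text):
    """Collect the labeled data records (##LABEL=value) of a JCAMP-DX text.

    Illustrative sketch only: data lines and continuation lines are skipped.
    """
    records = {}
    for line in text.splitlines():
        # "$$" starts an inline comment in JCAMP-DX; drop it.
        line = line.split("$$", 1)[0].strip()
        if not line.startswith("##") or "=" not in line:
            continue
        label, value = line[2:].split("=", 1)
        # Labels are matched ignoring case, spaces, dashes, slashes, underscores.
        key = re.sub(r"[\s\-/_]", "", label).upper()
        if key == "END":
            break
        records[key] = value.strip()
    return records


# Hypothetical file contents, made up for this example.
SAMPLE = """\
##TITLE=Hypothetical sample spectrum
##JCAMP-DX=4.24
##DATA TYPE=INFRARED SPECTRUM
##XUNITS=1/CM
##YUNITS=TRANSMITTANCE
##END=
"""

header = parse_jcamp_header(SAMPLE)
print(header["DATATYPE"], header["XUNITS"])
```

Because the records are plain text with published labels, any tool can recover the title, data type, and units without the acquiring vendor's software, which is the property the standardization efforts aim to generalize.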

More recent efforts include the Analytical Information Markup Language (AnIML), an open, vendor-neutral XML format for scientific data. AnIML is currently in pre-release and is moving through the final stages of the balloting process at the American Society for Testing and Materials.

Such efforts have attracted the attention of the Allotrope Foundation, an international association of pharmaceutical and biotech companies dedicated to the building of a “Laboratory Framework” to improve efficiency in data acquisition, archiving, and management. One of the major initiatives that the Foundation is taking on is the attempted implementation of common standards for all analytical data and contextual metadata generated in laboratory workflows.5 The Allotrope Foundation has stressed that it is not a standards body and that this work includes the evaluation and potential adaptation and extension of existing standards such as those described earlier.

These efforts are ambitious and very well organized, but time will tell whether they will be adopted by the different industries that routinely acquire analytical instrument data, and whether they can free those industries from the one-and-done data life cycle. Historically, a major challenge for such initiatives has been securing full buy-in from the many major hardware and software vendors in the analytical chemistry marketplace, who produce the many different proprietary data formats.

The groups behind AnIML and Allotrope have worked hard to achieve the level of collaboration required to deliver a true industry standard. Regardless of how it is achieved, such a standard will doubtless enable technology solutions; hasten vendor-agnostic analytical data management; and open up a range of possibilities for organizations to increase productivity, save time, improve problem-solving, and bring more innovations to the marketplace.

Ryan Sasaki is director of global strategy at Advanced Chemistry Development (ACD/Labs), a software firm that specializes in the development of data processing, analysis, and simulation tools that can be managed in a technology-neutral format in a single environment. The ACD/Spectrus Platform can capture and combine analytical data and metadata from a range of analytical instrumentation and combine it with chemical entities to help organizations share and leverage corporate and scientific knowledge from their analytical chemistry workflows.

1 http://iqconsortium.org

2 Ryan Sasaki and Bruce Pharr. Unified Laboratory Intelligence White Paper. February 2013.

3 Susan Feldman and Chris Sherman. The High Cost of Not Finding Information. IDC #29127. April 2003.

4 Burkhard Schaefer. A Fresh Look at the AnIML Data Standard.

5 James Vergis and Dana Vanderwall. Standardizing Data Management. In Analytical and Bioanalytical Testing: s20. BioPharm International. August 2014.