February 1, 2009 (Vol. 29, No. 3)

It Is Not Just Law-Enforcement Agencies Who Are Finding a Panoply of Uses for New Tools

What began as a community workshop nearly 20 years ago has grown exponentially, as has the field it addresses. In 1989, Promega hosted its first symposium on human identification. “The original goal of the meeting was to facilitate the exchange of ideas between scientists working on methods for DNA analysis,” said Len Goren, global director of Promega’s genetic identity business. “The annual symposium now has an international reputation for providing the forensic community with a forum to learn about new technologies and applications.”

The “International Symposium on Human Identification,” held late last year, highlighted advances and applications in the field. Recent advances in technology are driving increased sample throughput and speed. “This is a very exciting time to be in the field,” explained Lenny Klevan, Ph.D., head of forensics at Life Technologies. “DNA forensics is taking hold in the global market—it’s a growing field as law enforcement agencies around the world are using DNA testing as a way to keep their citizens safe.”

DNA forensics is not the sole property of murder and rape cases anymore. “People are now more interested in using DNA to solve less serious crimes,” noted Steven Hofstadler, Ph.D., vp, research at Ibis Biosciences. “Using DNA forensics to solve burglaries now enables law enforcement to cast a larger net. After all, there is a correlation—if someone breaks into houses, they are more likely to commit other crimes. And now we have the capability to catch those people.”

Samples taken from a crime scene can be of great value in identifying a perpetrator or ruling suspects out of an investigation (Forensic Science Service).

Quantitative Studies

Dr. Hofstadler noted that, as technology evolves, the applications broaden. He presented his group’s recent results on a DNA forensics platform based on fully automated high-throughput electrospray ionization mass spectrometry (ESI-MS). “The approach is based on using ESI-MS to weigh DNA forensic markers with enough accuracy to yield an unambiguous base composition, or the number of As, Gs, Ts, and Cs,” said Dr. Hofstadler. “This, in turn, can be used to derive a DNA profile for an individual.”
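To make the idea of “weighing” a marker concrete, here is a minimal Python sketch of how a measured mass can be turned back into candidate base compositions. It assumes the average residue masses and the −61.96 Da end correction from the common molecular-weight formula for a single-stranded oligonucleotide without a 5′ phosphate. The function names and tolerance values are hypothetical, and this is not Ibis’s software, which the article does not describe at this level.

```python
# Average residue masses (Da) from the common molecular-weight formula for a
# single-stranded DNA oligonucleotide without a 5' phosphate (assumption).
RESIDUE_MASS = {"A": 313.21, "C": 289.18, "G": 329.21, "T": 304.20}
END_CORRECTION = -61.96  # Da

def oligo_mass(a, c, g, t):
    """Average mass of an oligonucleotide with the given base counts."""
    return (a * RESIDUE_MASS["A"] + c * RESIDUE_MASS["C"]
            + g * RESIDUE_MASS["G"] + t * RESIDUE_MASS["T"] + END_CORRECTION)

def base_compositions(measured_mass, tolerance=0.5):
    """Every (A, C, G, T) count whose computed mass lies within tolerance of measured_mass."""
    residues = measured_mass - END_CORRECTION  # mass attributable to residues
    upper = residues + tolerance               # largest residue mass still allowed
    mA, mC, mG, mT = (RESIDUE_MASS[b] for b in "ACGT")
    hits = []
    for a in range(int(upper // mA) + 1):
        for g in range(int((upper - a * mA) // mG) + 1):
            for c in range(int((upper - a * mA - g * mG) // mC) + 1):
                # The T count is whatever best fills the remaining mass
                t = max(0, round((residues - a * mA - g * mG - c * mC) / mT))
                if abs(oligo_mass(a, c, g, t) - measured_mass) <= tolerance:
                    hits.append((a, c, g, t))
    return hits

measured = oligo_mass(4, 6, 6, 4)  # simulate measuring a hypothetical 20-mer
print(base_compositions(measured, tolerance=0.3))
print(base_compositions(measured, tolerance=1.0))
```

The tolerance matters: swapping one A+T pair for a C+G pair changes the mass by only about 0.98 Da with these average masses, so the measurement must be accurate well below 1 Da. Even then, some larger rearrangements are nearly isobaric, which is why the function returns every candidate rather than a single answer.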

This is important, according to Dr. Hofstadler, because base composition profiles can be referenced against existing forensic databases built from mitochondrial DNA (mtDNA) sequences or short tandem repeat (STR) profiles. “With mtDNA typing, the ESI-MS approach facilitates the analysis of samples containing sequence and length heteroplasmy in the HV1, HV2, and HV3 regions without degradation of information,” he added. “In fact, the method captures the extent and type of heteroplasmy in situations that are not amenable to sequencing.”

Using base compositions derived from a 24-primer-pair tiling panel that covers nucleotide positions 15924–16428 and 31–576, Dr. Hofstadler demonstrated that this approach has better resolution than traditional sequencing methods. “Because of the quantitative nature of ESI-MS, mixtures of different mtDNA types can be detected and resolved into distinctive components,” he pointed out. “Sometimes mixtures of mtDNA types analyzed by sequencing lead to uninterpretable results; analysis through ESI-MS yields quantitative data that maximizes the informative value of evidence.”
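Assuming that signal intensity scales with abundance, a two-component mtDNA mixture can in principle be summarized by the fraction each component contributes at amplicons where the two types give different base compositions. The sketch below is a simplification that also assumes both compositions ionize and are detected equally well; the amplicon names and intensities are hypothetical.

```python
from statistics import mean, pstdev

def mixture_fraction(signals):
    """signals maps amplicon -> (intensity of type 1, intensity of type 2).

    Returns the mean and spread of type 1's fraction, plus per-amplicon values.
    Assumes both base compositions ionize and are detected equally well.
    """
    fractions = {amp: i1 / (i1 + i2) for amp, (i1, i2) in signals.items()}
    values = list(fractions.values())
    return mean(values), pstdev(values), fractions

# Hypothetical intensities at three amplicons where the two mtDNA types differ
signals = {
    "amplicon_A": (300.0, 100.0),
    "amplicon_B": (620.0, 190.0),
    "amplicon_C": (140.0, 52.0),
}
avg, spread, per_amplicon = mixture_fraction(signals)
print(f"type 1 fraction = {avg:.2f} +/- {spread:.2f}")
```

A fraction that stays roughly constant across amplicons (a small spread) is consistent with a genuine two-component mixture; a large spread would suggest that this simple model does not fit the data.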

According to Dr. Hofstadler, this platform has a number of other applications, including clinical and epidemiological work, as well as biodefense. “It’s all about amplifying DNA, weighing it, and using it to get base pair signatures, and it’s not limited to forensics work. We’ve performed a number of blinded validation studies with the FBI and the National Institute of Standards and Technology to evaluate the platform for mtDNA and STR typing. And it offers distinct advantages over the conventional approach.”

On the STR side, the MS-based method picks up single nucleotide polymorphisms (SNPs) within STR regions that conventional electrophoretic analyses won’t detect. “SNPs show great promise as a next generation of forensic markers,” said Dr. Hofstadler. “One of the drawbacks right now is that the fragments are not large enough to measure markers. On the up side, they are easy markers to amplify.”
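The point about SNPs inside STR regions can be illustrated with two hypothetical alleles that have the same number of repeats but differ by a single A→G substitution. Length-based electrophoresis sees two identical fragments, whereas their base compositions, and therefore their masses, differ. The repeat sequence and SNP position are invented for illustration, and the average residue masses are an assumption borrowed from the common oligonucleotide molecular-weight formula.

```python
from collections import Counter

# Average residue masses (Da), an assumption borrowed from the common
# oligonucleotide molecular-weight formula.
RESIDUE_MASS = {"A": 313.21, "C": 289.18, "G": 329.21, "T": 304.20}

def composition(seq):
    """Base counts in A, C, G, T order."""
    counts = Counter(seq)
    return tuple(counts[b] for b in "ACGT")

def residue_mass(seq):
    """Sum of residue masses; end groups cancel when comparing equal-length strands."""
    return sum(RESIDUE_MASS[b] for b in seq)

allele_1 = "TCTA" * 10                          # hypothetical 10-repeat allele
allele_2 = allele_1[:19] + "G" + allele_1[20:]  # same allele with one A->G SNP

print(len(allele_1), len(allele_2))             # identical lengths
print(composition(allele_1), composition(allele_2))
print(f"mass shift: {residue_mass(allele_2) - residue_mass(allele_1):.2f} Da")
```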

Coping with the Data Bottleneck

Continuing improvements in the sensitivity of extraction and amplification techniques have increased the amount of information available, because DNA profiles are now more likely to be recovered from degraded or extremely small samples.

One of the few drawbacks of high throughput—if it can be called a drawback—is the mountain of data that it generates. “Greater automation is a big trend in the field,” said Mike Cariola, svp, forensic operations at The Bode Technology Group.

“We’ve noticed that, as we’ve developed automation to process samples in the lab, the bottleneck has shifted to data analysis—and that has become the new bottleneck of casework.”

Bode provides high-throughput DNA testing services, casework analysis, missing person identification, private and CODIS databanking of convicted offenders or arrestees, as well as paternity and nonforensic identification. “We do collections from crime scenes, handling about 6,000 cases a year, and about 100,000–200,000 convicted-offender samples,” said Cariola.

“There are six million files in the U.S. database, and states are gradually taking on more of the processing. Although there is an increase in the number of players in this field, overall our numbers have generally increased, due in part to an increase in the number of backlog cases we take on, and also in part because of the global reach our company has.”

Cariola’s talk focused on the development of a forensic DNA case-management system to address these bottlenecks, as well as the increase in cold hits. He noted that the demands of forensic casework put automated processes to the test.

“We deal with very individual samples of varying sizes and quality,” he explained. “Degraded and challenged samples are a problem for a laboratory information management system (LIMS). You put in 2,000 database samples, you have one application. Two thousand forensic casework samples all need different amplifications, are of varying quality, and have individual requirements. None of the LIMS providers out there meet this need, so we made our own.”

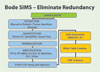

Bode developed Bode-SIMS to standardize processes and to integrate with robots for automated sample processing, analysis, and management. Customized to meet the varied needs of law-enforcement crime laboratories, the product improves quality control and efficiency in forensic DNA sample processing.

“This is the template most organizations are working from—building better analysis capability into software,” said Cariola. “A number of talks at the conference were on the software side, and one of the big trends we see emerging in casework is automating the process to interpret mixtures. And when you have complications in the sample, it’s a challenge for analysts to interpret that mixture.”


Building a Better Algorithm

Advances in automated mixture interpretation have been a key focus in the field out of necessity. Because higher throughput generates more data, the pressure is on the human analyst to interpret the data as quickly as possible, hence the bottleneck. Martin Bill, scientific manager of the Forensic Science Service, and his team are looking to alleviate that problem with new algorithms and software technologies that enable automated processing of this information.

“The current search/interpretation approach requires DNA analysts to make decisions based on the presence or lack of certain alleles within a given profile,” said Bill. “DNA is visualized as a signal, and the role of the scientist is to translate that signal into a simple set of numbers that defines the DNA profile. The unnecessary use of binary decisions during the analysis of DNA profiles is not only wasteful, but susceptible to errors.”

The challenges are not insubstantial. The binary approach requires the scientist to make an absolute decision on something that is not absolute. Bill’s group has developed a continuous DNA-interpretation model that enables automated interpretation of complex DNA profiles, thus avoiding the need to make decisions in the early stages of the search and interpretation processes.
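The contrast between a binary call and a continuous treatment can be sketched in a few lines. Here a hypothetical analytical threshold of 50 RFU (relative fluorescence units) produces opposite calls for peaks of 49 and 51 RFU, while an assumed logistic weighting assigns them nearly the same value. The threshold, midpoint, and slope are arbitrary, and the logistic curve is a stand-in chosen for illustration; it is not the Forensic Science Service model, whose details the article does not give.

```python
import math

ANALYTICAL_THRESHOLD = 50.0  # hypothetical threshold in RFU

def binary_call(height_rfu, threshold=ANALYTICAL_THRESHOLD):
    """All-or-nothing decision: the allele is either called or discarded."""
    return height_rfu >= threshold

def presence_weight(height_rfu, midpoint=50.0, slope=0.1):
    """Hypothetical logistic weight (0 to 1) that a peak reflects a true allele."""
    return 1.0 / (1.0 + math.exp(-slope * (height_rfu - midpoint)))

for h in (20, 49, 51, 120):
    print(f"{h:>4} RFU  call={binary_call(h)!s:<5}  weight={presence_weight(h):.3f}")
```

Carrying a weight forward instead of a yes/no call is one way to defer decisions until more of the profile has been evaluated, which is the general idea Bill describes.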

“It allows for improved precision and accuracy of databases for these particular profiles and permits a meaningful assignment of the evidential value of complex DNA evidence,” said Bill.

The algorithm uses these probabilities, rather than binary calls, to drive databasing and evidential interpretation, which further standardizes DNA interpretation. It can also be used to improve the precision and accuracy of database searching, increase the percentage of data suitable for databasing, and improve the assessment of evidence in court.

Bill presented some case studies of this algorithm in action. “The technology has come a long way—the degree to which we can get a signal from a very small or degraded sample has increased tremendously,” he reported. “However, we’re at the point where we need to improve the ability to interpret those signals, and we’re in a really good position to create those solutions. There is still more we can do to improve interpretation, and our group is working on an advanced theory for reading low level and compromised data. Our current portfolio is excellent, but this is not the endpoint. We are still looking for ways to do it better, faster, and cheaper.”

Automation Rules

Everyone agrees that automation has a big role to play in DNA forensics, and there needs to be more of it downstream as sample-processing throughput increases. Life Technologies’ GeneMapper® ID-X software offers an automated approach for the deconvolution of DNA mixtures, according to Dr. Klevan.

“There are many challenges for sample analysis in the field, but the one we specifically talked about in this presentation was what happens in instances in which there is more than one contributor to the sample,” he said. “The GeneMapper ID-X software streamlines the interpretation of profiles from mixed DNA samples by automating functions that would otherwise be performed manually.”

Dr. Klevan reported that, while standardization of practices in the field is starting to evolve, there is still significant variation between laboratories in the procedures for evaluating DNA mixtures. Consequently, two labs can interpret the same data slightly differently. “GeneMapper ID-X allows you to segregate the samples based on the minimum number of contributors. It deconvolutes two-person mixtures into contributor genotypes, and provides statistical frequencies.”
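The article does not specify which statistics GeneMapper ID-X reports. As a generic illustration, the textbook way to turn a single-source genotype into a random-match frequency is the product rule under Hardy–Weinberg assumptions: 2pq for a heterozygous locus and p² for a homozygous one, multiplied across loci. The allele frequencies below are hypothetical, and real casework adds corrections (for example, for population substructure) that are omitted here.

```python
import math

def genotype_frequency(p, q=None):
    """Hardy-Weinberg genotype frequency: p^2 if homozygous, 2pq if heterozygous."""
    return p * p if q is None else 2 * p * q

def profile_frequency(locus_genotypes):
    """Product rule across loci, assuming the loci are independent."""
    return math.prod(genotype_frequency(*freqs) for freqs in locus_genotypes.values())

# Hypothetical allele frequencies for a two-locus profile
profile = {
    "locus_1": (0.1, 0.2),  # heterozygote
    "locus_2": (0.3,),      # homozygote
}
freq = profile_frequency(profile)
print(f"random-match frequency = {freq:.4g} (about 1 in {1 / freq:,.0f})")
```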

The approach to deconvoluting two-person mixtures into individual profiles rests on two key inferences. First, at any locus, the peaks for two alleles originating from the same person have roughly the same height; second, once established, the mixture proportion remains consistent across all loci within a given profile. “The approach results in a set of genotype combinations that are scored across the profile and heterozygote peak-height ratio,” Dr. Klevan added.
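The two inferences can be turned into a simple least-squares search, one standard way of formalizing them. For each candidate mixture proportion, every pair of genotypes that explains a locus’s peaks is scored by how closely its expected peak-height proportions match the observed ones; the proportion with the lowest total error across all loci wins, which enforces the assumption that one proportion is shared by every locus. This is a sketch under stated assumptions (no stutter, no dropout, at most four alleles per locus, hypothetical peak heights) and not the algorithm implemented in GeneMapper ID-X.

```python
from itertools import combinations_with_replacement, product

def candidate_genotypes(alleles):
    """All unordered allele pairs (including homozygotes) drawn from the observed alleles."""
    return list(combinations_with_replacement(sorted(alleles), 2))

def fit_locus(peaks, mx):
    """Best (sse, major, minor) genotype pair at one locus for major proportion mx."""
    total = sum(peaks.values())
    observed = {allele: height / total for allele, height in peaks.items()}
    best = (float("inf"), None, None)
    options = candidate_genotypes(peaks)
    for major, minor in product(options, repeat=2):
        if set(major) | set(minor) != set(peaks):
            continue  # the pair must account for every observed allele
        sse = 0.0
        for allele, obs in observed.items():
            # Each allele copy carries half of its contributor's share of the signal
            expected = mx * major.count(allele) / 2 + (1 - mx) * minor.count(allele) / 2
            sse += (obs - expected) ** 2
        if sse < best[0]:
            best = (sse, major, minor)
    return best

def deconvolve(profile, step=0.01):
    """Grid-search mx in [0.5, 1) so that a single proportion is shared by every locus."""
    best = (float("inf"), None, None)
    for i in range(round(0.5 / step), round(1 / step)):
        mx = i * step
        fits = {locus: fit_locus(peaks, mx) for locus, peaks in profile.items()}
        total_sse = sum(fit[0] for fit in fits.values())
        if total_sse < best[0]:
            best = (total_sse, mx, {locus: fit[1:] for locus, fit in fits.items()})
    return best

# Hypothetical peak heights (RFU) for a two-person mixture
profile = {
    "vWA": {14: 300, 16: 700, 17: 300, 18: 700},
    "D8S1179": {12: 700, 13: 1000, 15: 300},  # allele 13 shared by both contributors
    "TH01": {6: 700, 7: 300, 8: 300, 9.3: 700},
}
sse, mx, genotypes = deconvolve(profile)
print(f"estimated major proportion: {mx:.2f}")
for locus, (major, minor) in genotypes.items():
    print(f"{locus}: major {major}, minor {minor}")
```

Restricting the proportion to at least 0.5 removes the trivial symmetry in which the major and minor labels are swapped.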

Multiple-Capillary Systems

As breakthroughs in reagent chemistries increase the ability to detect trace amounts of DNA, Promega is pushing the limits of available instrumentation. Recently, some users of Promega’s PowerPlex 16 and PowerPlex ES Systems started to notice a split, or n-1, peak at the vWA locus, which was affecting some labs’ ability to interpret their data. This occurred only on the 4- and 16-capillary electrophoresis platforms, not on single-capillary systems, and was more prevalent in customer labs during the winter, when lab temperatures could dip.

The issue was traced to an insufficiently denaturing environment in the exposed portion of the capillary outside of the heating oven on the multicapillary instruments. This allowed reannealing of the unlabeled, unincorporated vWA primer to the 3´ end of the tetramethylrhodamine (TMR)-labeled strand of the vWA amplicon following electrokinetic injection. The partially double-stranded DNA migrated faster than the single-stranded amplicon, and this mobility shift manifested itself as the split or n-1 peak.

“We were in a situation where the split peak artifact was being caused by an instrumentation-related issue, but not being able to redesign the instrumentation, we had to come up with a workaround with the chemistry,” said Bob McLaren, Ph.D., senior R&D scientist at Promega. Dr. McLaren and his team found that stretching out the pre-run time would keep this reannealing from occurring, presumably by increasing the temperature in the exposed portion of the capillary array.

“The split would go away, but increasing the pre-run time wasn’t really enough to solve the problem,” Dr. McLaren noted. “What we eventually ended up doing was incorporating into the Internal Lane Standard 600 (ILS600) a sacrificial hybridization sequence oligonucleotide that is complementary to the vWA primer. This preferentially hybridizes to the vWA primer and thus prevents the primer from hybridizing to the 3´ end of the TMR-labeled strand of the vWA amplicon.”
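Hybridization requires antiparallel complementarity, so an oligonucleotide meant to capture a primer is designed as the primer’s reverse complement. The snippet below shows only that step, using an invented 16-base sequence; the actual PowerPlex vWA primer and ILS600 oligonucleotide sequences are not given in the article, and a real design would also weigh melting temperature and possible cross-hybridization with other components of the multiplex.

```python
# Watson-Crick base pairing (uppercase and lowercase)
COMPLEMENT = str.maketrans("ACGTacgt", "TGCAtgca")

def reverse_complement(seq):
    """Return the strand that pairs antiparallel with seq, written 5'->3'."""
    return seq.translate(COMPLEMENT)[::-1]

hypothetical_primer = "ACCTGAGTCAATGCTA"  # invented sequence, 5'->3'
capture_oligo = reverse_complement(hypothetical_primer)
print(f"primer        5'-{hypothetical_primer}-3'")
print(f"capture oligo 5'-{capture_oligo}-3'")
```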

Users also noted an n-10/n-18 artifact at the vWA locus in the same STR multiplexes. Again, the root cause turned out to be an inability to keep already denatured DNA in a denatured state on the exposed portion of the capillary array. In this case, the n-10/n-18 artifact was actually double-stranded vWA amplicon. In a similar fashion to the split-peak solution, two complementary oligo targets (COT1 and COT2) were added to the ILS600; these preferentially anneal to the unlabeled strand of the vWA amplicon, thereby preventing it from annealing to the TMR-labeled strand.

“The presence of these three oligonucleotides effectively eliminates both the split peak and the n-10/n-18 artifacts in both products without affecting sizing of alleles at the vWA locus—or any other locus,” Dr. McLaren explained. “The users found the problem, which turned out to be instrument related, and we went the extra mile to find a solution.”