September 1, 2009 (Vol. 29, No. 15)

Now Found in Almost Every Kind of Lab, It Is Beginning to Supplant Older Technologies

In 2005, two landmark papers describing novel cycle-array sequencing methods ushered in a new era in genetics. Known at the time as next-generation sequencing (NGS), these methods are now more commonly described as second-generation sequencing.

Although initially expected to supplant Sanger sequencing, NGS technologies have done an end run around Sanger and are instead encroaching on technologies like the DNA microarray, or staking a claim on new fields like metagenomics.

Compared to conventional Sanger sequencing, second-generation sequencing has several advantages. One is a streamlined workflow that eliminates transformation and colony picking—major bottlenecks in the process. Another is mind-bogglingly massive parallelism. Array-based sequencing can theoretically capture hundreds of millions of sequences in parallel. These changes have dramatically reduced the cost of sequencing from about $0.50 per kilobase to as little as $0.001 per kilobase. For read length and sheer accuracy, however, Sanger sequencing still rules.

With 30 years of technology development behind it, a Sanger system can produce read lengths of 1,000 bp, with nearly perfect accuracy, whereas second-generation challengers tend to achieve read lengths of less than 100 bp, with roughly ten times the number of inaccurate base calls.

Second-generation sequencing technologies are still very much on the steep portion of the development curve, so improvements in accuracy and read length can be expected on a regular basis for years to come. In the meantime, however, second-generation sequencing has been embraced for myriad applications in which expensive Sanger sequencing would be out of the question.

At CHI’s “Exploring Next Generation Sequencing” meeting to be held later this month, a number of speakers are slated to present real-world next-generation sequencing results—a blossoming of the technology pioneered just four years ago.

Fishing for RNA Editing Sites

Representing the Church Laboratory at the Harvard Medical School, Jin Billy Li, Ph.D., will present recent studies using the Illumina sequencing platform to do targeted sequencing of RNA editing sites. Targeted sequencing is an increasingly popular practice to further reduce the costs associated with sequencing. Rather than sequencing a whole genome or a whole library, targeted pieces are selected based on the nature of the study.

In this case, Dr. Li and his colleagues are studying adenosine-to-inosine RNA editing (inosine reads as guanosine), which diversifies the human transcriptome and has been implicated in brain function. Tissue from the cerebellum, frontal lobe, corpus callosum, diencephalon, small intestine, kidney, and adrenal gland from a single individual contributed the cDNA and gDNA for the study.

Screening more than 36,000 computationally predicted A-to-I sites, obtaining 57.5 million reads, they identified 500 new editing sites. “We have moved the RNA editing field forward a lot,” Dr. Li notes. “We’re moving from 20 or 30 sites to hundreds of new editing sites. We’re very excited with that piece of work.”

Previous efforts at identifying RNA editing sites have focused on conserved regions of the genome. Dr. Li’s study differs in that it used an unbiased approach, including conserved and unconserved locations enriched with RNA editing sites. Their results confirm previous observations that the primate lineage is enriched with RNA editing sites. Access to second-generation sequencing technology allowed this group of scientists to cast an extremely wide net, picking up 50 times as many instances of RNA editing as had ever been found before.

Tracking Bacterial Transcriptomes

Bias is an important consideration in many types of genetic studies. Hybridization-based techniques suffer from bias because of differences in hybridization efficiencies of oligonucleotides. When Nicholas Bergman, Ph.D., assistant professor in the school of biology at Georgia Institute of Technology, wanted to study bacterial gene expression, he wanted to look at the bacterial transcriptome in an unbiased way. That led him in the direction of second-generation sequencng, rather than the conventional DNA microarray.

Hundreds of bacterial genomes have been sequenced by now, and more genomes are under way. The large body of data, however, does not reveal much information about how the genes are expressed, how they are regulated, or how they are linked.

Dr. Bergman used second-generation sequencing technologies—Solexa from Illumina and SOLiD from Applied Biosystems, a division of Life Technologies—to probe the functions and relationships of the Bacillus anthracis transcriptome.

“We don’t know where those operon boundaries lie,” he says. “It’s surprisingly difficult to predict, we would like to understand that.” The result was a high-resolution map of the gene families within the bacterial genome. “Right off the bat, we could see transcript structure.”

As in the case of Dr. Li’s work with RNA editing, the second-generation sequencing technology enabled Dr. Bergman and his colleagues to make a significant leap forward in the field of bacterial transcriptomics. Previously, the best-mapped bacterial transcriptome was E. coli with 1.5% of transcripts mapped. In a single experiment, the Georgia Institute of Technology group captured 60% of transcripts from B. anthracis.

Dr. Bergman used a standard microarray approach to validate the experiment, with closely corresponding results. “For the most part they matched extremely closely, and they differ really only in instances where the arrays are not able to make an accurate measurement.”

Although it is a major accomplishment to be able to map the majority of transcripts in a bacterial genome, another significant aspect of this research is what it means for the DNA microarray. “The place I can speak in the most informed way is gene-expression analysis by sequencing. On that topic, I don’t see a long-term future for microarrays. The only thing holding us back is cost. Costs have been coming down steadily.”

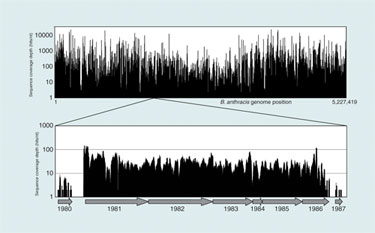

Georgia Institute of Technology researchers are studying the sequence coverage across the B. anthracis chromosome from an RNA-Seq experiment.

Probing Mutations in Cancer

At the Broad Institute, researchers are looking for genetic causes of glioblastoma. Stacey Gabriel, Ph.D., director of the genome sequence and analysis program, will be presenting the results of their search using next-generation sequencing methods. The goal is to identify different kinds of genetic changes that may cause the cancer, such as point mutations, structural rearrangements, and other events in the genome.

In the past, a variety of methods would also have been used, including microarrays and conventional sequencing. Second-generation sequencing offers the benefit of addressing many questions in a single experiment. “There are things we can find with sequencing that we wouldn’t have found before,” Dr. Gabriel says, adding, “we’ve found rearrangements in the glioblastomas, for example, that we weren’t able to see on the SNP arrays. There are also interesting transformations of some genes that were new to us. In ovarian cancer we’re finding, using a combination of point mutations and structural rearrangements, new pathways important in the cancer.”

One of the key strategies of their approach is targeting only the coding portion of the genome, which is just 1–2% of the overall genome. They utilize Solexa, using a method invented at the Broad Institute, and work in collaboration with Agilent Technologies. “We call it hybrid selection. It’s a technology that uses long oligos that Agilent synthesizes on arrays. The long oligos are cleaved off the arrays and capture corresponding parts of the human genome. We’re able to isolate the part that gets captured and sequence that.”

The most powerful of these is a set of all human exons—from all 20,000 human genes. That allows the scientists to target every gene in one experiment. “The power of using next-generation sequencing and the richness of the information that we’re getting now, in an almost routine way, is impressive,” adds Dr. Gabriel. “There’s still a long way to go to really work out the best and most sensitive and specific analysis. I think that some of the errors that are created in this data are still poorly understood. One of the main challenges right now is increasing the accuracy of our interpretation of data.”

Matthew Ferber, Ph.D., is a codirector of the clinical molecular genetics laboratory at Mayo Clinic. His group is working on hereditary colon cancer. They start with 22 colon cancer genes on a Roche NimbleGen (Roche Applied Science) capture array, then elute the DNA and submit it for second-generation sequencing on the 454 and Solexa platforms.

The goal of this initial experiment was to compare the two platforms to see which is most useful for diagnostic purposes. “Each company right now has its strengths and weaknesses,” Dr. Ferber explains. Ultimately, the comparison seems pretty much a wash, and Dr. Ferber has not yet made a final decision.

“At the end of the day, the answer no clinical lab wants to hear is, you’ll have to sequence with both platforms to get a clear clinical picture. The economics of this for a clinical laboratory becomes untenable. There must be a convergence of the technology that allows for high throughput, short run time, long reads, all at an economical price.”

Second-generation sequencing has developed rapidly since its introduction in 2005. Now appearing in research, industrial, and clinical laboratories, it is finding new uses and new applications, and in some cases supplanting older technologies.