January 1, 2009 (Vol. 29, No. 1)

Elizabeth Lipp

Need for Extensive and Reliable Identification of Proteins Is Now Widely Recognized

Screening technologies for proteins are still gaining momentum, and as the discipline matures scientists are wondering what technologies are best suited for proteomic profiling. “There’s also a lot of discussion about quantification methodology—what is the best and most efficient way to get the results you need,” notes Chris Becker, executive director of PPD.

Additionally, there have been concerns about the maturity of the different proteomic technologies. “Proteomics is a hyped area,” says Christer Wingren, Ph.D., associate professor at Lund University. “But it’s starting to become a mature platform based on what you can do in clinical and profile studies.”

In order to address advances in the screening arena, Select Biosciences is hosting three meetings in Berlin next month. A few of the presenters at the “Screening” meeting spoke to GEN before the conferences to explain where protein profiling stands today.

The Swedish Human Proteome Resource program (HPR) was developed to allow for a systematic exploration of the human proteome using antibody-based proteomics. This research combines high-throughput generation of affinity-purified (mono-specific) antibodies with protein profiling in a multitude of tissue/cell types assembled in tissue microarrays.

“The program hosts the Human Protein Atlas portal with expression profiles of human proteins in tissues and cells,” explains Frederik Ponten, M.D., Ph.D., department of genetics and pathology, Uppsala University.

Dr. Ponten’s presentation will describe his group’s multidisciplinary research program and how it pairs high-throughput generation of mono-specific (affinity-purified) antibodies with protein profiling of human tissues and cells on tissue microarrays.

“We began this project five years ago on two different sites, Stockholm and Uppsala. Eighty researchers are working on this full time,” notes Dr. Ponten. “We are in a steady state, averaging 20 antibodies a day; 50 percent are approved and work well enough to do protein profiling. It actually boils down to ten antibodies a day or 3,000 new protein profiles a year.

“A new version of the Human Protein Atlas is released on an annual basis, and the current version contains protein profiles for over 6,000 antibodies representing 5,000 unique human proteins corresponding to 25 percent of the human genome.”
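The throughput figures Dr. Ponten quotes can be checked with a few lines of arithmetic. The sketch below uses only the numbers from his remarks, with one assumption: the 300 working days per year is not stated in the interview and is simply what 3,000 profiles at ten per day implies. The variable names are illustrative.

```python
# Figures quoted by Dr. Ponten
antibodies_per_day = 20
approval_rate = 0.50

# Assumption: not stated in the interview; implied by 3,000 / 10
working_days_per_year = 300

approved_per_day = antibodies_per_day * approval_rate
profiles_per_year = approved_per_day * working_days_per_year

# Atlas coverage: 5,000 unique proteins described as 25 percent of the genome
unique_proteins = 5000
coverage = 0.25
implied_gene_count = unique_proteins / coverage

print(approved_per_day)    # 10.0
print(profiles_per_year)   # 3000.0
print(implied_gene_count)  # 20000.0
```

Read this way, the 25 percent figure implies a working estimate of roughly 20,000 protein-coding genes.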

Dr. Ponten’s group is concentrating on finding predictive biomarkers for cancer, with a particular focus on major human cancer types such as breast, colorectal, prostate, and lung cancer.

“I hope people recognize us as a resource for protein-related research,” remarks Dr. Ponten. “We need to get the word out that we’re here. The effort is fairly well known in the proteomics community, but in the cancer field, people need to know that we are a resource that can be utilized for various research projects.”

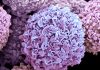

Multicolor labeling of breast carcinoma cells for high-content screening

Targeting for Tumor Management

Oncologic applications loom large on the proteomic profiling landscape. Another group will specifically present on Kv10.1 (Eag1) as a target for tumor management. Luis Pardo, M.D., Ph.D., group leader, Max-Planck Institute for Experimental Medicine, says that he will describe different tumor theranostics approaches, taking advantage of the selective expression profile of Eag1 channels in tumors together with its role in tumor biology.

Dr. Pardo’s group has been working on a particular ion channel in tumor cells that is virtually not expressed outside of the brain. “This is a selective marker for tumor cells—normal cells expressing it are protected by the blood-brain barrier,” he explains. “It allows an aggressive therapy with low side effects. We have developed some blocking antibodies for the channel in animal models. Side effects are low, but the treatment is not as effective as we would like. We are developing combinations between the antibody and cytotoxic compounds.”

Dr. Pardo adds that his group’s approach is standard, “but what is unique about what we are doing is the target. Up until now, tumor markers are generally specific for certain tumor types—this has a wide range in tumor types; one is not restricted to specific tumor types. It also has the advantage of dealing with intact cells. You don’t have to get into the cell—it’s on the surface.”

Screening against individual therapeutic proteins can identify hit compounds. Wayne Bowen, Ph.D., CSO at TTP LabTech, notes that a systems biology approach is better suited for target identification and mechanism of action studies, and that high-content methodologies are addressing this need in a higher-throughput environment.

“We are part of a large group, The Technology Partnership. It’s a pretty special setup; we sell both instrumentation and development consultancy, although we are a bit different in our approach,” points out Dr. Bowen. “We provide novel tools to our clients, who dictate which instrumentation comes to the market.”

In his presentation, Dr. Bowen will describe the laser-scanning approach of the Acumen eX3, which scans entire wells cell by cell. That makes it well suited to the analysis of large objects and improves assay robustness, he says. The customer-driven software applies cytometric principles rather than image analysis, making for less labor-intensive screening across a broad range of high-content assays.

“We work in large area screening—20 mm x 20 mm is a large enough area to simultaneously scan four wells in a 96-well plate,” he says. “What Acumen offers is a wide field-of-view, rapidly reporting high-content data for every cell in every well.”
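The four-well figure is consistent with standard plate geometry. A minimal sketch, assuming the standard 9 mm center-to-center well spacing of a 96-well plate and a typical well diameter of about 6.9 mm (the diameter varies by manufacturer and is not given in the article); the function names are hypothetical:

```python
WELL_PITCH_MM = 9.0      # standard 96-well center-to-center spacing
WELL_DIAMETER_MM = 6.9   # assumption: typical value, varies by manufacturer
FIELD_MM = 20.0          # scan area side length quoted by Dr. Bowen

def span_mm(n_wells):
    """Edge-to-edge width of n adjacent wells in one row."""
    return WELL_PITCH_MM * (n_wells - 1) + WELL_DIAMETER_MM

def wells_per_side(field_mm):
    """Largest number of adjacent wells that fits across the field."""
    n = 0
    while span_mm(n + 1) <= field_mm:
        n += 1
    return n

side = wells_per_side(FIELD_MM)
print(side * side)  # 4
```

Under these assumptions two adjacent wells span about 15.9 mm and three span about 24.9 mm, so a 20 mm square field covers a two-by-two block of wells.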

Dr. Bowen notes that this technology still has restricted use despite its broad applicability. “The Acumen is used for sustained 24/7 high-content screening, essentially highly automated analysis of lots of plates with little user intervention. The instrument offers high-throughput analysis and gives you data for all cells. People make mistakes, and so do robots. If you can count all cells in each well, you can normalize the data. Whole-well scanning thus adds to the robustness of the data.”
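The normalization Dr. Bowen mentions can be illustrated with a small sketch. The well names and counts below are hypothetical, and the function is an assumption about one simple way to normalize, not a description of the Acumen software.

```python
def fraction_positive(positive_cells, total_cells):
    """Express a well's response as a fraction of all cells counted in it."""
    if total_cells == 0:
        return None  # empty well: nothing to normalize against
    return positive_cells / total_cells

# Hypothetical wells: (positive cells, total cells counted).
# A2 received half as many cells as A1, for example through a dispensing error.
wells = {"A1": (120, 1000), "A2": (60, 500), "A3": (0, 0)}

normalized = {
    name: fraction_positive(pos, total) for name, (pos, total) in wells.items()
}
print(normalized)  # {'A1': 0.12, 'A2': 0.12, 'A3': None}
```

The raw positive counts for A1 and A2 differ twofold, yet the normalized response is identical. That is the sense in which counting every cell in the well makes the data more robust to seeding errors.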

Dr. Bowen describes one instance where speed was key. “In seven days, we were able to scan 18,000 genes twice using RNAi profiling, and were able to find 1,000 interesting genes. The secondary confirmation screen was done in three hours. I received thirteen 384-well plates at 9:00 am and had them read by 12:15 pm. This kind of follow-up allows you to be successful, fast.”
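As a rough check on those numbers, the sketch below works out the read rate implied by the times Dr. Bowen gives. It treats the full 9:00 am to 12:15 pm window as reading time, which is an assumption; the quote only says the plates were read by 12:15 pm, so the true rate may have been higher.

```python
from datetime import datetime

plates = 13
wells_per_plate = 384

# Times quoted by Dr. Bowen; the calendar date is arbitrary
received = datetime(2009, 1, 1, 9, 0)
finished = datetime(2009, 1, 1, 12, 15)
minutes = (finished - received).total_seconds() / 60

total_wells = plates * wells_per_plate
minutes_per_plate = minutes / plates
wells_per_minute = round(total_wells / minutes, 1)

# Primary screen: 1,000 interesting genes out of 18,000 scanned
hit_rate = round(1000 / 18000, 3)

print(total_wells)        # 4992
print(minutes_per_plate)  # 15.0
print(wells_per_minute)   # 25.6
print(hit_rate)           # 0.056
```

That works out to at most 15 minutes per 384-well plate, and a primary-screen hit rate of about 5.6 percent.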

According to Dr. Bowen, producing high volumes of data can be counterproductive. “We process terabytes of information, but throw it away as soon as the high-content parameters have been determined. There is no requirement for a long-term data-storage solution. You develop and validate an assay and run with it. A key point is that researchers now want to screen at higher throughput. Why is high-content analysis not used as much? Not every technology is directly transferable. They don’t last or can’t be used in every instance.”

A scanned antibody microarray, a close-up of that microarray, and then yet another close-up, this one showing a dispensed scFv antibody that has captured a labeled analyte.

Recombinant Antibody Microarrays

High-content screening continues to be a hot topic, and there are a number of methods currently under advanced development in proteome screening. Dr. Wingren will talk about his lab’s high-content proteome screening methodology, which is based on recombinant antibody microarrays.

“We are on an ongoing track to improve the performance of our technology platform, and are using a human recombinant scFv antibody library, microarray adapted by molecular design, optimal for this particular application as probe source,” he says. “Our in-house library is composed of 210 of antibodies—so, from a logistic point of view, we have an antibody for almost every antigen at hand, all we have to do is to select for it. In fact, we have set up and optimized a complete in-house antibody microarray facility, providing key advantages and readily allowing us to perform various clinical studies, initially focusing on oncoproteomics.”

The case studies Dr. Wingren will review involve two applications, presented using systemic lupus erythematosus (SLE) and pre-eclampsia. “Both of these discovery projects have generated interesting data based on blood-based analysis.” He notes that the SLE candidate biomarker signature(s) are particularly promising.

“It would be really exciting to see if we could validate our findings in a second clinical study. Our initial findings are good, but a larger, independent follow-up study is required. Currently, there are no blood-based screening tests for either SLE or pre-eclampsia.”

“Our pre-eclampsia study is a first-line study at an early stage, but still we have discovered some tentative serum biomarker signatures that, of course, need to be followed up and validated in larger, independent studies using a novel set of clinical samples,” Dr. Wingren says. Both of these studies have been performed in close collaboration with clinicians, again indicating the need for cross-disciplinary efforts within clinical proteomics.

“I want to emphasize that we have an in-house designed antibody microarray technology platform that readily can target crude, nonfractionated proteomes with high specificity and sensitivity,” he adds. “You can do this in a truly multiplexed fashion, screening several hundred analyses at the same time, while consuming only minute amounts of the sample. From a clinical point, we have to find candidate signatures for SLE and pre-eclampsia to enable and improve disease diagnostics, patient stratification, and monitoring of these patients.”

Collaborations: Better Research

Using well-established techniques in novel ways is PPD’s forte, notes Becker. “Part of the paradigm of the industry involves profiling for the discovery phase and then developing those results,” he says. “You can have any number of samples, from 20 to 500. How do you choose which markers you are going to pursue for validation by a method such as multiple reaction monitoring? And there is much discussion about different methods of quantification. What’s the best way?”

Regarding quantification, PPD participated, along with other laboratories, in a study done by The Association of Biomolecular Resource Facilities (ABRF), which does yearly blind studies on methods, materials, and standards in its ongoing Quantitative Proteomics Study.

“They did a blind test in 2007, a study of a matrix of E. coli lysate with spiked unknowns at varying concentrations, and 36 labs responded,” says Becker. “When comparing methods, label-free did very well, followed by iTRAQ. But even with these proven methods, many responders failed. That means that a lot of people out there are still in the learning phase.”

Becker says that the ABRF study fueled several email exchanges; one participant observed that no one has ever done a label-free whole proteome study. “But we have. We were very careful, and did a replicate study before publishing. Not everyone in the proteomics field realizes this has been done. We published this study in the Journal of Clinical Oncology. Sometimes, it’s thought that if a study is not published in a proteomics journal, it doesn’t exist.”

Proteomics is gaining ground in clinical applications, a little at a time, Becker explains. “The field is making progress after a rough start where some researchers wanted to use signatures of signals without understanding the identification of the underlying proteins. But the field has been maturing, and there is now widespread recognition that results with extensive and reliable protein identifications are essential.”