June 15, 2015 (Vol. 35, No. 12)

Sean Muthian, Ph.D., Director of Strategic Marketing and Collaborations, Sigma-Aldrich

Must Reproducibility Be Such an Elusive Goal in Translational Research?

In 2012, the Organisation for Economic Co-operation and Development countries invested about $59 billion in biomedical research—about 89% of which may have been wasted on science that cannot be reproduced. Are we looking at a $52.5 billion white elephant?
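The $52.5 billion figure follows from the two numbers above. A minimal Python check of the arithmetic (the variable names are illustrative only):

```python
# Back-of-the-envelope check of the headline estimate.
oecd_biomedical_spend_bn = 59.0  # OECD biomedical research investment in 2012, US$ billions
irreproducible_fraction = 0.89   # share that may have been spent on irreproducible work

wasted_bn = oecd_biomedical_spend_bn * irreproducible_fraction
print(f"Potentially wasted: ${wasted_bn:.1f} billion")
```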

To stay on the well-trodden path from fundamental discovery to life-changing medicines, researchers and developers have heeded the scientific principle, which has endured for hundreds of years even as technology has grown more sophisticated and collective knowledge has expanded. The exacting peer-review process helps to disseminate the knowledge gathered and also validates the findings.

Most experiments are never independently reproduced. Instead, there is great confidence that methodologies, reagents, and models have been used appropriately. Published results present well-structured statistical analyses with impressive P-values. And the peer-review process ensures that other scientists have given their stamp of approval. The scientific principle also ensures that any imperfections are ironed out over time and that a solid theory emerges.

What if the story were far more complex? What if the accepted scientific principle fell short of its promise? Unfortunately, there are signs that this may be the case: publication of results does not guarantee the statistical or experimental rigor that we would expect.

In reality, many scientists have had difficulty reproducing results. We need look no further than the failure of drugs in clinical trials. John Arrowsmith, science director at Thomson Reuters Life Sciences Consulting, publishes regular updates on drug failures at different stages of clinical trials. His latest analysis,1 which looked at 2008–2010, showed a stark drop in the success rate of Phase II trials (from 28% to 18%).
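A fall from 28% to 18% is 10 percentage points in absolute terms, but a relative decline of roughly 36% in the Phase II success rate. A short sketch of that distinction (the helper `relative_change` is a hypothetical name introduced here for illustration):

```python
def relative_change(before: float, after: float) -> float:
    """Return the relative change from `before` to `after` as a fraction."""
    return (after - before) / before

phase2_before = 0.28  # earlier Phase II success rate reported by Arrowsmith
phase2_after = 0.18   # success rate in the 2008-2010 analysis

absolute_drop_pts = (phase2_before - phase2_after) * 100
relative_drop = -relative_change(phase2_before, phase2_after)

print(f"Absolute drop: {absolute_drop_pts:.0f} percentage points")
print(f"Relative drop: {relative_drop:.1%}")
```

Reporting both numbers avoids the common confusion between percentage points and percent.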

This is a very expensive disconnect, and one that must be examined, especially from the perspective of reproducibility. In this article, we consider areas for introspection and action at all levels of science, from the bench to the broader scientific community. In a second article, Copy Me If You Can, Part 2, available exclusively online, we look at some practical solutions that can have a big impact.

Surely Reproducibility Is Not So Difficult to Achieve?

The reproducibility question was examined by scientists in the target identification and validation group at the pharmaceutical firm Bayer Healthcare. This group, led by Khusru Asadullah, Ph.D., produced findings that broadly aligned with the prevailing “gut feeling” but were still surprising.2 Only 20–25% of published data could be reproduced, and roughly 65% showed inconsistencies that led to validation delays and project discontinuations. When the group looked at potential confounding factors, such as the journal impact factor, the number of publications, and the number of independent research groups, none of them correlated with the results.

Similar findings were reported by Amgen’s C. Glenn Begley, Ph.D., and MD Anderson Cancer Center’s Lee M. Ellis, M.D. In fact, these investigators showed a potentially more worrying picture.3 In only 11% of cases could findings be confirmed. The scientists also looked at how irreproducible studies have initiated a stream of further research and secondary publications on the assumption that the original research was accurate. Some of these even resulted in clinical investigation programs, leading the authors to question whether some clinical trials exposed patients to regimens that probably wouldn’t work.

Steve Perrin, Ph.D., CEO and CSO, ALS Therapy Development Institute, looked at this topic from the perspective of animal models of disease.4 He highlighted that over 80% of potential new therapies subsequently failed in clinical trials, even after animal models of human disease had shown their safety and efficacy. This carries a significant failure cost, both financially and, more importantly, in terms of people subjected to unnecessary risk in clinical trials.

Although such startling numbers might point to failings on the part of the scientists involved, the problem is so widespread that its origins, causes, and solutions presumably run deeper. On rare occasions, bad science has been performed or data have even been completely fabricated. However, competing factors and pressures (such as the drive to “publish or perish”) point to a far more complex situation. Fortunately, this also means there are various opportunities to improve upon the status quo.

In the Second Annual State of Translational Research survey conducted by Sigma-Aldrich,5 364 translational researchers from around the globe provided insights into irreproducibility. Their responses not only mirrored much of the general sentiment expressed above but also shed light on areas where bench scientists can implement changes.

The survey asked whether irreproducibility is a major barrier to successful research, and 61% of respondents said “yes,” 26% said “no,” and 13% said “unsure.” Scientists were also asked to consider the role that publishers, funding sources, and institutes play. For example, when survey participants were asked whether journals had taken sufficient steps to ensure reproducibility, the “yes,” “no,” and “unsure” responses were 26%, 47%, and 27%, respectively. Similarly, when survey participants were asked whether their institutions had set explicit and stringent policies in this area, the “yes,” “no,” and “unsure” responses were 49%, 40%, and 12%, respectively.

Grant bodies have varying requirements around reproducibility; however, 54% of respondents either lacked or were unaware of any specific requirements in their grants. Whether grant bodies could support the additional cost of performing appropriate checks is a moot point; some do require such efforts but appear rarely to check that they are carried out.

Reproducibility appears to be less of a problem when researchers attempt to repeat their own laboratory’s experiments (77% said they had done so in the last year) than when they attempt to repeat research from other laboratories (22% said they had, 31% said they hadn’t, and 47% said they were unsure).

The explanations for this discrepancy could be straightforward: for example, insufficient methods/protocols sections or lack of contact with the original authors to confirm specifics. They could also run much deeper, such as bias or differing standards for success. Respondents also cited barriers such as lack of access to the right equipment, or original research so cutting edge that they lacked the expertise to repeat it.

Interestingly, 71% of respondents had not read any of the three commentaries referenced in this article.2–4 Nonetheless, they seemed familiar with the irreproducibility issue that was central to those commentaries.

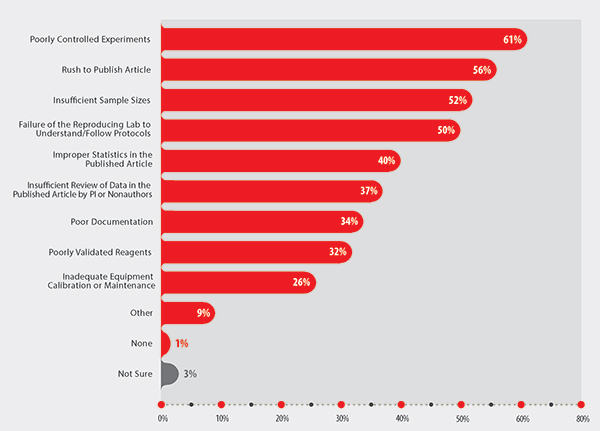

For example, when respondents were asked to cite the causes of irreproducibility, they provided a list that echoed the concerns from the three aforementioned publications. Key amongst these were poor experimental control (61%), rush to publish (56%), and insufficient sample size (52%) (see Figure 1 for all of the perceived causes of irreproducibility).

Figure 1. Primary causes of irreproducibility cited by researchers who participated in Sigma-Aldrich’s Second Annual State of Translational Research: 2014 Survey Report.5

Inadequate Statistical Treatments

The appropriateness of the statistics used was also highlighted as a problem, with 40% of respondents citing improper statistics as a cause of irreproducibility. Notably, incorrect or inappropriate statistical analyses and insufficient sample sizes were also highlighted in the commentary published by the Bayer Healthcare researchers.2

John P. A. Ioannidis, M.D., Ph.D., professor of health research and policy at Stanford School of Medicine, published a provocatively titled report, “Why Most Published Research Findings Are False,” in which he argued that, for most study designs and settings, a research claim is more likely to be false than true.6 He showed that a finding is less likely to be true when studies are small, when effect sizes are small, and when a greater number of relationships are tested with less preselection, along with a number of other important factors, including researcher bias.
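Ioannidis frames this in terms of the positive predictive value (PPV), the probability that a statistically significant finding is actually true. In the simplest, no-bias case of his model, PPV = (1 − β)R / (R − βR + α), where R is the pre-study odds that a tested relationship is true, α is the Type I error rate, and 1 − β is the study's power. The sketch below evaluates that formula; the scenario values are hypothetical and chosen only to show how low power or long pre-study odds drag the PPV down.

```python
def positive_predictive_value(R: float, alpha: float = 0.05, power: float = 0.8) -> float:
    """Probability that a statistically significant finding is true.

    No-bias formula from Ioannidis (2005):
        PPV = (1 - beta) * R / (R - beta * R + alpha)
    R     : pre-study odds that the tested relationship is true
    alpha : Type I error rate
    power : 1 - beta, where beta is the Type II error rate
    """
    beta = 1.0 - power
    return (1.0 - beta) * R / (R - beta * R + alpha)

# Hypothetical scenarios (illustrative values, not taken from the article)
scenarios = {
    "well-powered, plausible hypothesis": dict(R=0.1, power=0.8),
    "small study, plausible hypothesis": dict(R=0.1, power=0.2),
    "well-powered, exploratory screen": dict(R=0.01, power=0.8),
}
for label, params in scenarios.items():
    print(f"{label}: PPV = {positive_predictive_value(**params):.2f}")
```

In this model a finding is more likely true than false only when (1 − β)R > α, which is why small, underpowered studies of long-shot hypotheses fare so poorly.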

Regina Nuzzo, associate professor, Gallaudet University, points out that relying on the much-lauded P-value as proof of statistical validity is not only a statistical error in itself but probably also a major source of bias.7
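One familiar way the P-value misleads is when many comparisons are run and only the “significant” ones are reported. The sketch below is an illustration of that general effect, not a reconstruction of Nuzzo's analysis. It assumes k independent tests of hypotheses that are all truly null, each judged at α = 0.05, computes the chance of at least one spurious hit as 1 − (1 − α)^k, and checks it by simulation using the fact that P-values are uniformly distributed when the null hypothesis is true. The function names are hypothetical.

```python
import random

def prob_any_false_positive(k: int, alpha: float = 0.05) -> float:
    """Chance of at least one P < alpha among k independent tests when every null is true."""
    return 1.0 - (1.0 - alpha) ** k

def simulate(k: int, alpha: float = 0.05, trials: int = 20_000, seed: int = 1) -> float:
    """Monte Carlo estimate: under a true null hypothesis, P-values are Uniform(0, 1)."""
    rng = random.Random(seed)
    hits = sum(
        any(rng.random() < alpha for _ in range(k))  # did any of the k tests "succeed"?
        for _ in range(trials)
    )
    return hits / trials

for k in (1, 5, 20):
    print(f"{k:>2} tests: exact {prob_any_false_positive(k):.2f}, simulated {simulate(k):.2f}")
```

With 20 such tests, the chance of at least one nominally significant result is close to two in three, even though no real effect exists.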

Retraction Awareness

There is also the pressing issue of publication retractions. The impact of such retractions was discussed in a comprehensive survey completed by Michael L. Grieneisen, Ph.D., and Minghua Zhang, Ph.D., both of the Department of Land, Air and Water Resources at the University of California, Davis.8 These scientists found that the rate of retractions increased by a factor of 11.36 between 2001 and 2010. Yet many of these papers continued to receive citations, partly because the mechanisms used to flag retractions are neither applied consistently nor fully integrated with bibliography systems or online databases.
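To put the 11.36-fold figure in perspective, it can be converted into an implied compound annual growth rate. This is a sketch under a simplifying assumption of ours, not something Grieneisen and Zhang report: growth is treated as steady across the nine year-on-year steps from 2001 to 2010. The function name is hypothetical.

```python
def implied_annual_growth(fold_change: float, years: int) -> float:
    """Constant yearly growth rate g such that (1 + g) ** years == fold_change."""
    return fold_change ** (1.0 / years) - 1.0

fold_increase = 11.36    # reported rise in the retraction rate, 2001 to 2010
intervals = 2010 - 2001  # nine annual steps (simplifying assumption)

g = implied_annual_growth(fold_increase, intervals)
print(f"Implied growth: {g:.1%} per year")
```

Under that assumption the retraction rate would have grown by roughly 31% a year; the real trajectory need not have been smooth.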

The Sigma-Aldrich survey also noted that nearly 40% of scientists rarely (26%) or never (11%) checked for retractions, with only 34% always checking.5 Moreover, these figures concern only papers that are actually retracted, a process that requires considerable effort, leaving other questionable papers to circulate freely. Combined with Dr. Ioannidis’ contention that most published findings are false,6 this might lead one to conclude that scientists should reconsider whether their trust in the peer-review process is justified.

On the surface, this may look like a rather worrying picture, but a lack of reproducibility doesn’t necessarily mean that the data are incorrect, or that the process is broken. Rather than signalling an end to the trustworthiness of the scientific principle, this is a critical point at which to make individual and collective efforts to improve the outlook. In the online sequel to this article, we explore some practical steps that could mitigate some of these issues.

Translational Implications of Irreproducibility

Lee M. Ellis, M.D.

The causes of irreproducibility are diverse and range from sloppiness and selective reporting all the way to data fabrication. The implications are far reaching. Irreproducibility not only affects the time, effort, and funding of investigators in other laboratories, but it also affects early-phase clinical trials. In other words, if we cannot rely on solid and honest reporting of data in preclinical studies (including reporting of negative data), how are we going to be able to translate this work to the clinic?

Developing a drug can take more than a decade and cost millions of dollars. But this is nothing compared to the importance of making sure that we give our patients the best opportunity to benefit from reliable research. I know that many journals now recognize this issue, as does the NIH. But we must continue to have an open conversation about academic pressures, “impact factor mania” (an issue recently discussed by Casadevall and Fang in mBio), and the implications of irreproducible data.

Lee M. Ellis, M.D., is professor of surgery, Department of Surgical Oncology, Division of Surgery, University of Texas MD Anderson Cancer Center, Houston, TX. He is also the vice chair of translational medicine, SWOG (formerly the Southwest Oncology Group), Portland, OR.


1 Arrowsmith, J. 2011. Nat. Rev. Drug Disc. 10: 328–329.

2 Prinz, F., et al. 2011. Nat. Rev. Drug Disc. 10: 712.

3 Begley, C.G. & Ellis, L.M. 2012. Nature 483: 531–533.

4 Perrin, S. 2014. Nature 507: 423–425.

5 Sigma-Aldrich Corporation. 2014. Second Annual State of Translational Research 2014 Survey Report. Available at: www.sigma-aldrich.com/translational-survey. (Accessed 1 March 2015.)

6 Ioannidis, J.P.A. 2005. PLoS Med. 2(8): e124.

Sean Muthian, Ph.D., is director of strategic marketing and collaborations at Sigma-Aldrich.